twobee - Fotolia

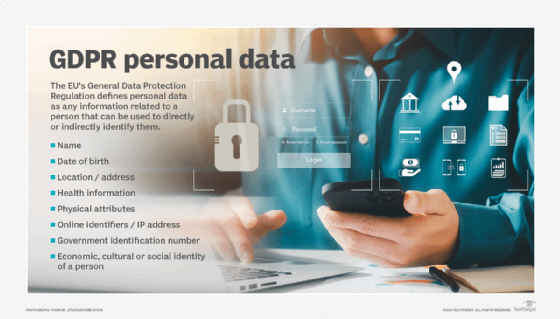

GDPR regulations put premium on transparent AI

As the EU's GDPR regulations go into effect, enterprises must focus on building transparency in AI applications so that algorithms' decisions can be explained.

The European Union's new GDPR regulations could shake up the way enterprises craft algorithms to make decisions, particularly when it comes to building transparent AI applications.

"GDPR will impact all industries, and has particularly relevant ramifications for AI developers and AI-enabled businesses," said Dillon Erb, CEO at Paperspace Co., an AI cloud provider.

The explainability of AI is a hot topic, especially for deep learning and opaque machine learning, said Will Knight, senior editor for AI at MIT Technology Review. The bulk of the GDPR legislation focuses on ensuring that enterprises acquire consent from users before processing their data, but there are also aspects of the law that grant individuals the right to know how a business made a decision using their data. This applies even when an enterprise has legitimate access to that personal data.

"It is not possible, nor is it legal, to tell a customer that their financial transaction was declined simply because the model said so," said Chad Meley, vice president of marketing at Teradata.

Impact of transparent AI

These requirements could affect the types of AI algorithms used by enterprises, the training data used to build them and the process of documenting the development process. Enterprises will also have to ensure that humans are involved in automated decisions.

Recital 71, a companion document to GDPR, states that enterprises must be able to explain how algorithms are used to make decisions. It specifically mentions being able to explain, "the existence of automated decision-making, and, at least in those cases, meaningful information about the logic involved, as well as the significance and the envisaged consequences of such processing for the data subject." Companies will have to figure out how to translate the complicated reasoning within AI pipelines into simple language.

"The challenge is that the GDPR requirements do not define what is a satisfying explanation or how enterprises must determine these explanations," said Vikram Mahidhar, AI growth leader at professional services firm Genpact. "Still, even without this pending EU regulation, the responsible use of AI is a concern every company needs to consider."

When is transparency in AI necessary?

This need to explain AI-powered decisions will apply primarily to decisions that have a significant impact on a person's life. Applications like lead scoring, product recommendation engines and fraud detection could fall outside the rubric.

Enforcement is likely to be driven by complaints and requests in response to consumer inquiries, such as:

- Why are my insurance rates higher than my neighbor's?

- Why was I denied a loan or credit card?

- Why was my job application rejected?

- Why was a medical treatment rejected?

Model interpretability is a key challenge because deep learning techniques make it difficult to explain how a model arrived at a conclusion -- the information it uses to make decisions is hidden away quite literally in hidden layers. While a model's output may occasionally seem self-explanatory, such as when algorithms correctly identify images, in other cases, it may be completely opaque.

"The ability to explain these models is often an imperative, especially in cases where there are laws that prevent decisions based on data that can be considered discriminatory -- for example, approving or denying a loan -- or where there is significant exposure to litigation -- for example, medical diagnosis," Meley said.

Researchers work to improve model interpretability

Though far from solved, there are several approaches that enterprises are using to address the problem of transparent AI. One involves a method called LIME (Local Interpretable Model-Agnostic Explanations), an open source body of research produced by the University of Washington. LIME sheds light on the specific variables that triggered the algorithm at the point of its decision and produces that information in a readable report.

Another example is DARPA's Explainable Artificial Intelligence initiative, which increases transparent AI by introducing explainable models, techniques and interfaces with which users can interact.

In the case of fraud, knowing this information can provide security from a regulatory standpoint, as well as help the business understand how and why the fraud is happening, according to Meley.

Understand your data's behavior

Data engineers need to evaluate the behavior of the data that moves through AI pipelines. Some AI approaches, like natural language understanding, and particularly computational linguistics, can be implemented in a way that ensures there is traceability that tracks and provides transparency into recommendations machines make.

Yet, tracking and tracing these decisions often isn't enough. Enterprises need a more comprehensive governance structure with more advanced technologies, like neural networks that do not permit traceability.

"The key is to fully understand your data's behavior," Genpact's Mahidhar said.

It's not just about implementing AI algorithms; it's about building them with effective data engineering in the first place.

Best practices for building transparent AI applications include documenting assumptions about the completeness of the data, addressing data biases and reviewing the new rules identified by the machine before implementation.

If you use machine learning to identify anomalies, you can put checks and balances in place to manually test and determine if the results make sense, Mahidhar said. When designing and testing AI, it is also important to involve people with a detailed understanding of the processes and industry issues. Human domain expertise is essential.

Algorithmic tradeoffs

Companies should also consider when to use what AI technology for which processes. For example, neural networks are not appropriate for functions where traceability is critical. Yet, sometimes performance and speed may take precedence over detailed tracking of AI decisions, Mahidhar said.

For example, if a bank suspects money laundering, it can use neural networks as advanced augmented intelligence to quickly analyze millions of data points and to connect the dots much faster than humanly possible, narrowing the field for human analysis.

"Companies may have to trade off performance over explainability," said Venkat Ramasamy, COO of CodeLathe, a data management service.

For example, data scientists might identify one model that has 95% accuracy but no explainability, and another with 90% accuracy but good explainability. Managers and data protection officers will need to set policies to address these tradeoffs and ensure these policies are followed across the AI development pipeline.

Document everything

Another component of GDPR relevant to AI and machine learning is that no individual should be subject to a decision based solely on automated processing.

"On the surface, this would seem to preclude the use of any AI and machine learning for the processing of personal data," said Derek Slager, CTO and co-founder of Amperity, a customer data platform that uses machine learning for customer segmenting. "However, a deeper look reveals this is not the case."

Data scientists might argue that the use of AI requires training, tuning and care, and feeding to be truly effective, and that the model must be designed, implemented and operated by people. Documenting the process by which this occurs so that it is easily explainable to a regulator will be critical in compliance efforts.

"We are working to add transparency and reproducibility to the machine learning and AI pipeline, and expect this to become more relevant than ever as businesses work to become compliant," Paperspace's Erb said. This involves knowing exactly what data produces certain predictions and understanding how these predictions perform in practice with real-world data vs. training data sets.