Expanding explainable AI examples key for the industry

Improving AI explainability and interpretability is key to building consumer trust and furthering the technology's success.

AI systems have tremendous potential, but the average user has little visibility into, or knowledge of, how the machines make their decisions. AI explainability can build trust and further expand the technology's capabilities and adoption.

When humans make a decision, they can generally explain how they came to their choice. But many AI algorithms provide an answer without giving any specific reason for it. This is a problem.

AI explainability is a big topic in the tech world right now, and experts have been working to create ways for machines to start explaining what they are doing. In addition, experts have identified key explainable AI examples and methods to help create transparent AI models.

What is AI explainability?

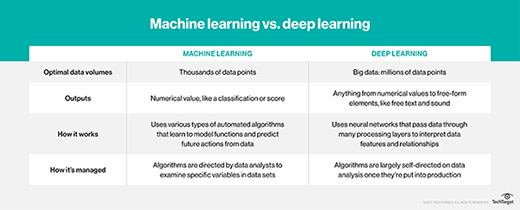

Determining how a deep learning or machine learning model works isn't as simple as lifting the hood and looking at the programming. For most AI algorithms and models, especially ones using deep learning neural networks, it is not immediately apparent how the model came to its decision.

AI models can be in positions of great responsibility, such as when they are used in autonomous vehicles or when assisting the hiring process. As a result, users demand clear explanations and information about how these models come to decisions.

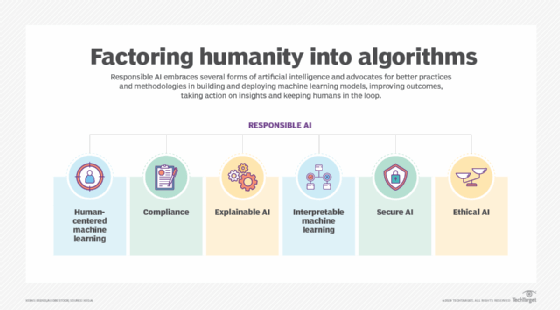

Explainable AI, also referred to as XAI, is an emerging field in machine learning that aims to explain how AI systems reach their decisions. The field examines the steps an AI model takes to arrive at a decision and tries to make those steps understandable. Many in the research community, and in particular the U.S. Defense Advanced Research Projects Agency (DARPA), have worked to improve the understanding of these models. DARPA is pursuing explainable AI through numerous funded research initiatives, and many companies are also working to bring explainability to AI.

Why explainability is important

For certain explainable AI examples and use cases, describing the decision-making process the AI system went through isn't pressing. But when it comes to autonomous vehicles and making decisions that could save or threaten a person's life, the need to understand the rationale behind the AI is heightened.

On top of knowing that the logic used is sound, it is also important to know that the AI is completing its tasks safely and in compliance with laws and regulations. This is especially important in heavily regulated industries such as insurance, banking and healthcare. If an incident does happen, the humans involved need to understand why and how that incident happened.

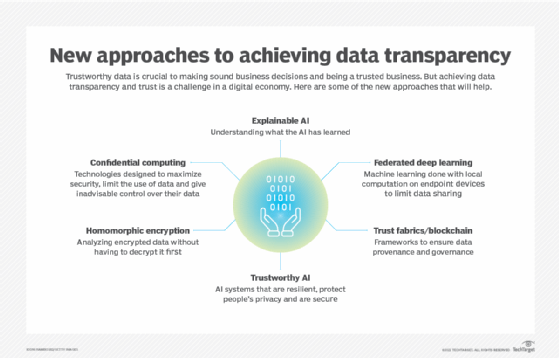

Behind the desire for a better understanding of AI is the need to trust these systems. For artificial intelligence and machine learning to be useful, there must be trust. To earn that trust, there needs to be a way to understand how these intelligent machines make decisions. The challenge is that some of the technologies adopted for AI are not transparent, which makes it difficult to fully trust their decisions, especially when humans operate in only a limited capacity or are removed from the loop entirely.

We also want to make sure that AI makes fair and unbiased decisions. AI systems have made the news numerous times for biased decision-making. In one example, an AI system built to determine the likelihood of a criminal reoffending was biased against people of color. Identifying this kind of bias in both the data and the AI model is essential to creating models that perform as expected.
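One concrete way to look for this kind of bias is to compare a model's error rates across groups. The minimal sketch below uses made-up records and a hypothetical `false_positive_rates` helper to compute, for each group, how often people who did not reoffend were still flagged as high risk. A large gap between groups is a warning sign. This is only one of several fairness metrics, and which one is appropriate depends on the application.

```python
from collections import defaultdict

def false_positive_rates(records):
    """Return the false positive rate for each group.

    Each record is (group, predicted_high_risk, actually_reoffended).
    A false positive is someone flagged as high risk who did not reoffend.
    """
    flagged = defaultdict(int)    # non-reoffenders who were flagged anyway
    negatives = defaultdict(int)  # everyone who did not reoffend
    for group, predicted, actual in records:
        if not actual:
            negatives[group] += 1
            if predicted:
                flagged[group] += 1
    return {g: flagged[g] / negatives[g] for g in negatives}

# Hypothetical records for illustration only; not real data.
records = [
    ("A", True, False), ("A", False, False), ("A", False, False), ("A", False, False),
    ("B", True, False), ("B", True, False), ("B", False, False), ("B", False, False),
    ("A", True, True), ("B", True, True),
]
rates = false_positive_rates(records)
gap = max(rates.values()) - min(rates.values())
print(rates)
print(f"False positive rate gap between groups: {gap:.2f}")
```

Here group B's non-reoffenders are flagged twice as often as group A's, which is exactly the sort of disparity an explainability or auditing process should surface.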

The difficulty of building explainable AI

Today, numerous AI algorithms lack explainability and transparency. Some algorithms, such as decision trees, can be examined and understood by humans. However, the more sophisticated and powerful neural network algorithms, such as deep learning models, are far more opaque and more difficult to interpret.
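To illustrate why a decision tree can be examined by humans, the sketch below walks a tiny tree and records every threshold test it applies, so the prediction comes with its own readable explanation. The loan-approval task, the features `income` and `debt_ratio`, and the thresholds are all hypothetical, and the tree is hand-built rather than learned from data.

```python
# Hypothetical hand-built decision tree. Internal nodes test one feature
# against a threshold; leaves hold the final label.
TREE = {
    "feature": "income", "threshold": 50_000,
    "left": {"label": "deny"},  # income <= 50,000
    "right": {
        "feature": "debt_ratio", "threshold": 0.4,
        "left": {"label": "approve"},  # debt_ratio <= 0.4
        "right": {"label": "deny"},
    },
}

def predict_with_path(node, applicant):
    """Walk the tree, recording each test so the decision can be read back."""
    path = []
    while "label" not in node:
        name, limit = node["feature"], node["threshold"]
        value = applicant[name]
        if value <= limit:
            path.append(f"{name} = {value} <= {limit}")
            node = node["left"]
        else:
            path.append(f"{name} = {value} > {limit}")
            node = node["right"]
    return node["label"], path

label, path = predict_with_path(TREE, {"income": 72_000, "debt_ratio": 0.25})
print(label)
for step in path:
    print(" -", step)
```

A deep neural network offers no comparable path: its output depends on many weighted connections at once, so there is no short chain of tests to print.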

These popular and successful algorithms have given AI and machine learning potent capabilities, but the resulting systems are not easily understood. Relying on black box technology can be dangerous.

But explainability isn't as easy as it sounds. The more complicated a system gets, the more connections it makes between different pieces of data. For example, when a system performs facial recognition, it matches an image to a person. But the system can't explain how the pixels in the image map to that person because of the complex web of connections involved.
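Although the model itself can't say which pixels mattered, post-hoc techniques can give a partial answer from the outside. One simple example is occlusion sensitivity: blank out one part of the input at a time and measure how much the model's match score drops. The sketch below applies it to a hypothetical 4x4 "image" and a stand-in scoring function, `match_score`; a real facial recognition system would be an actual trained network, and occlusion is usually done with larger patches rather than single pixels.

```python
# Hypothetical 4x4 grayscale "image" with values between 0 and 1.
IMAGE = [
    [0.1, 0.2, 0.2, 0.1],
    [0.2, 0.9, 0.8, 0.2],
    [0.2, 0.8, 0.9, 0.2],
    [0.1, 0.2, 0.2, 0.1],
]

# Stand-in "model" that happens to weight the centre pixels heavily.
# The occlusion method below never reads these weights; it only calls the model.
PIXEL_WEIGHTS = [
    [0.0, 0.1, 0.1, 0.0],
    [0.1, 1.0, 1.0, 0.1],
    [0.1, 1.0, 1.0, 0.1],
    [0.0, 0.1, 0.1, 0.0],
]

def match_score(image):
    return sum(w * p for wrow, prow in zip(PIXEL_WEIGHTS, image) for w, p in zip(wrow, prow))

def occlusion_map(image, score_fn, fill=0.0):
    """Score drop when each pixel is replaced by `fill`, one pixel at a time."""
    base = score_fn(image)
    heat = []
    for r in range(len(image)):
        row = []
        for c in range(len(image[r])):
            occluded = [list(line) for line in image]  # copy so the original is untouched
            occluded[r][c] = fill
            row.append(base - score_fn(occluded))
        heat.append(row)
    return heat

heat = occlusion_map(IMAGE, match_score)
for row in heat:
    print(" ".join(f"{v:.2f}" for v in row))
```

The resulting heat map highlights which regions the score depends on, but it describes the model's sensitivity, not its internal reasoning, so it only partly closes the explainability gap.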

How to create explainable AI

There are two main ways to provide explainable AI. The first is to use machine learning approaches that are inherently explainable, such as decision trees or Bayesian classifiers. These offer a degree of traceability and transparency in their decision-making, which, for many problems, can provide the visibility needed for critical AI systems without a major sacrifice in performance or accuracy.
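A Bayesian classifier is traceable in a similar way. With naive Bayes, the log-odds of one class versus another is a prior term plus one term per feature, so each term can be reported directly as that feature's contribution. The spam-filter features and probabilities below are hypothetical and hard-coded for brevity; the sketch assumes binary features and the usual naive Bayes assumption that features are independent given the class.

```python
import math

# Hypothetical, pre-estimated probabilities; in practice these come from training data.
PRIOR = {"spam": 0.4, "ham": 0.6}
LIKELIHOOD = {  # P(feature is present | class)
    "contains_link": {"spam": 0.7, "ham": 0.2},
    "known_sender": {"spam": 0.1, "ham": 0.8},
}

def explain(features):
    """Return the total log-odds of spam vs. ham and each term's contribution."""
    contributions = {"prior": math.log(PRIOR["spam"] / PRIOR["ham"])}
    for name, present in features.items():
        p_spam = LIKELIHOOD[name]["spam"]
        p_ham = LIKELIHOOD[name]["ham"]
        if not present:  # use P(feature absent | class) instead
            p_spam, p_ham = 1 - p_spam, 1 - p_ham
        contributions[name] = math.log(p_spam / p_ham)
    return sum(contributions.values()), contributions

log_odds, parts = explain({"contains_link": True, "known_sender": False})
print("spam" if log_odds > 0 else "ham")
for name, value in parts.items():
    print(f"{name:>14}: {value:+.2f}")
```

Positive terms push toward spam and negative terms toward ham, so the printout doubles as an explanation of the decision.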

The second is to develop new approaches to explain more complicated and sophisticated neural networks. Researchers and institutions, such as DARPA, are currently working to create methods for explaining these more complicated machine learning methods. However, progress in this area has been slow.
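Many explanation methods for complex models treat the model as a black box and probe it from the outside. The sketch below shows one widely used, model-agnostic example, permutation feature importance (a general technique, not a specific DARPA method): shuffle one input feature at a time and measure how much accuracy drops. The `black_box` function and the random data are hypothetical stand-ins for a trained neural network and a real dataset.

```python
import random

def black_box(row):
    """Stand-in for an opaque model; the explainer only calls it, never inspects it."""
    x0, x1, x2 = row
    return 1 if 3 * x0 + 0.5 * x1 > 2 else 0  # x2 is ignored by design

def accuracy(model, X, y):
    return sum(model(row) == label for row, label in zip(X, y)) / len(y)

def permutation_importance(model, X, y, n_features, seed=0):
    """Accuracy drop when one feature's column is shuffled, breaking its link to y."""
    rng = random.Random(seed)
    baseline = accuracy(model, X, y)
    importances = []
    for j in range(n_features):
        column = [row[j] for row in X]
        rng.shuffle(column)
        X_perm = [row[:j] + (value,) + row[j + 1:] for row, value in zip(X, column)]
        importances.append(baseline - accuracy(model, X_perm, y))
    return importances

data_rng = random.Random(42)
X = [tuple(data_rng.random() for _ in range(3)) for _ in range(500)]
y = [black_box(row) for row in X]  # labels taken from the model itself, for simplicity
importances = permutation_importance(black_box, X, y, n_features=3)
print([round(v, 3) for v in importances])
```

Feature 0 should show the largest drop and feature 2 none at all, which matches how the stand-in model was built. With a real model, the same probe reveals which inputs it relies on without opening it up.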

When considering explainable AI, organizations must determine who needs to understand their deep learning or machine learning models. Should feature engineers, data scientists and programmers understand the models, or do business users need to understand them as well?

By deciding who should understand an AI model, organizations can decide the language used to explain the model's decisions. For example, should the explanation be in a programming language or in plain English? If opting for a programming language, a business might need a process to translate the output of its explainability methods into explanations that a business user, or another non-technical user, can understand.
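Such a translation step can be quite simple. The sketch below, using a hypothetical `to_plain_english` helper and made-up attribution scores, ranks features by the size of their contribution and turns the top few into a short sentence. It assumes the explainability tool produces a signed score per feature, where positive means the feature pushed toward the prediction; real tools differ in their output format.

```python
def to_plain_english(prediction, contributions, top_n=2):
    """Turn signed feature contributions into a short summary for business users.

    `contributions` maps a human-readable feature description to a score:
    positive values pushed toward the prediction, negative values against it.
    """
    ranked = sorted(contributions.items(), key=lambda kv: abs(kv[1]), reverse=True)
    reasons = [
        f"{name} weighed {'in favor' if score > 0 else 'against'}"
        for name, score in ranked[:top_n]
    ]
    return f"Prediction: {prediction}. Main factors: " + "; ".join(reasons) + "."

# Hypothetical attribution scores, such as an explainability tool might produce.
scores = {
    "the applicant's income": 0.42,
    "the applicant's debt level": -0.15,
    "account age": 0.03,
}
message = to_plain_english("approve", scores)
print(message)
```

Limiting the summary to the top few factors is a deliberate trade-off: it keeps the message readable for non-technical users at the cost of omitting minor contributions.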

The more that AI is a part of our everyday lives, the more we need these black box algorithms to be transparent. Having trustworthy, reliable and explainable AI without sacrificing performance or sophistication is a must. There are several good examples of tools to help with AI explainability, including vendor offerings and open source options.

Some organizations, such as the Advanced Technology Academic Research Center (ATARC), are working on transparency assessments. This self-assessed, multifactor score considers factors such as algorithm explainability, identification of the data sources used for training and the methods used for data collection.
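To show what a self-assessed, multifactor score might look like in practice, here is a minimal sketch of a weighted rubric. The three factors echo those listed above, but the 0 to 4 rating scale, the weights and the `transparency_score` function are hypothetical illustrations, not ATARC's actual scoring method.

```python
# Hypothetical rubric: each factor is self-rated from 0 (none) to 4 (fully documented).
# Factor names follow the text; weights are illustrative only, NOT ATARC's method.
FACTOR_WEIGHTS = {
    "algorithm_explainability": 0.4,
    "training_data_sources_identified": 0.3,
    "data_collection_methods_documented": 0.3,
}
MAX_RATING = 4

def transparency_score(ratings):
    """Weighted average of self-assessed ratings, scaled to 0-100."""
    missing = FACTOR_WEIGHTS.keys() - ratings.keys()
    if missing:
        raise ValueError(f"missing ratings for: {sorted(missing)}")
    for factor, rating in ratings.items():
        if not 0 <= rating <= MAX_RATING:
            raise ValueError(f"{factor} rating must be between 0 and {MAX_RATING}")
    total = sum(FACTOR_WEIGHTS[f] * ratings[f] / MAX_RATING for f in FACTOR_WEIGHTS)
    return round(100 * total, 1)

score = transparency_score({
    "algorithm_explainability": 2,
    "training_data_sources_identified": 4,
    "data_collection_methods_documented": 3,
})
print(score)
```

Because the ratings are self-reported, a score like this is only as reliable as the honesty and rigor of the assessment behind it.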

By taking these factors into account, people can self-assess their models. Such a score isn't perfect, but it's a necessary starting point for giving others insight into what's going on behind the scenes. Above all, making AI trustworthy and explainable is essential.