A look at the leading artificial intelligence infrastructure products

The artificial intelligence infrastructure market is young and varied, with enterprise AI vendors offering everything from cloud services to powerful, and expensive, hardware.

Companies looking to build artificial intelligence infrastructures have many options, as tech vendors offer a wide range of products and services. Each product can help organizations build and maintain IT infrastructures to support AI applications.

This roundup of vendor offerings provides a quick snapshot of the types of products companies need to create an effective artificial intelligence infrastructure and gain the most value from their AI initiatives.

Using extensive research into the artificial intelligence infrastructure market, TechTarget editors focused on both market-leading and well-established enterprise vendors. The research included data from TechTarget surveys, as well as reports from other respected research firms, including Gartner.

Amazon

AWS supports a number of artificial intelligence services for its cloud infrastructure platform. One notable offering is the machine learning service SageMaker. SageMaker is a fully managed service designed to enable developers and data scientists to quickly and easily build, train and deploy machine learning models at any scale. Its modules can be used together or independently.

Amazon SageMaker includes hosted Jupyter notebooks that enable users to explore and visualize training data stored in Amazon Simple Storage Service (S3). They can connect directly to data in S3, or use AWS Glue to move AI data from Amazon Relational Database Service, Amazon DynamoDB and Amazon Redshift into S3 for analysis in a notebook.

To help select an algorithm, SageMaker includes the 12 most common machine learning algorithms preinstalled. The service also comes preconfigured to run TensorFlow and Apache MXNet, two of the most popular open source frameworks. Organizations also have the option of using their own frameworks.

Among the use cases for this service are ad targeting, credit default prediction, industrial internet of things, and supply chain and demand forecasting.

Amazon offers a variety of pricing models for SageMaker based on use.

Baidu Inc.

Chinese internet search company Baidu opens up its AI capabilities to third-party developers through its ai.baidu.com open platform, which offers APIs and software development kits for image recognition, speech recognition, natural language processing, etc. Most of these are free of charge, according to the company.

Baidu's DeepBench is an open source benchmarking tool that measures the performance of basic operations involved in training deep neural networks and inference, according to the company. These operations execute on different hardware platforms using neural network libraries.

DeepBench, available as a repository on GitHub, attempts to answer the question of which hardware provides the best performance for the basic operations used for deep neural networks.
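To make the idea of benchmarking a basic operation concrete, here is a minimal sketch in plain Python that times a dense matrix multiply, one of the core operations in deep neural networks. It is not DeepBench code and does not use its interfaces. The function names, matrix sizes and pure-Python multiply are assumptions chosen only for illustration.

```python
import time


def matmul(a, b):
    """Naive dense matrix multiply on lists of lists."""
    # Transpose b once so each output cell is a dot product of two rows.
    b_t = list(zip(*b))
    return [[sum(x * y for x, y in zip(row, col)) for col in b_t] for row in a]


def gemm_flops(m, n, k):
    """Floating-point operations for an (m x k) by (k x n) multiply."""
    return 2 * m * n * k  # one multiply and one add per inner-loop step


def benchmark_gemm(m, n, k, repeats=3):
    """Time the multiply and report the best run in seconds and FLOP/s."""
    a = [[float(i + j) for j in range(k)] for i in range(m)]
    b = [[float(i - j) for j in range(n)] for i in range(k)]
    best = float("inf")
    for _ in range(repeats):
        start = time.perf_counter()
        matmul(a, b)
        best = min(best, time.perf_counter() - start)
    best = max(best, 1e-12)  # guard against a zero reading on coarse clocks
    return {"seconds": best, "flops_per_second": gemm_flops(m, n, k) / best}


result = benchmark_gemm(32, 32, 32)
```

Running the same function on different machines gives a rough, like-for-like comparison of one operation, which is the spirit of the question DeepBench poses for real hardware and libraries.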

Cloudera Inc.

Cloudera Altus is a machine learning and analytics PaaS tool built with a shared AI data catalog and data context.

Altus includes tools for data engineering, SQL and BI analytics. The company plans to add data science tools, as well. Altus has the ability to deliver a shared data experience (SDX) across a variety of data analysis functions, according to Cloudera. That means there is one trusted source for metadata, including schema, security and governance policies, for all the machine learning and analytics services.

Cloudera intends for Altus with SDX to enable self-service without fear of analytics sprawl, and to provide business users with on-demand tools to develop complex, data-driven applications.

Cloudera Altus is priced based on a managed cloud service usage charge of $0.08 per hour for data engineering.

Confluent Inc.

The Confluent Platform, based on Apache Kafka, is a streaming platform for enterprises looking to glean value from AI data. It spans to the edges of an organization and captures data in streams of real-time events. Businesses in industries such as retail, logistics, manufacturing, financial services, technology and media can use it to respond in real time to customer events, transactions, user experiences and market movements -- whether on premises or in the cloud.

The platform acts as a central nervous system that enables companies to build scalable, real-time applications for activities such as fraud detection, customer experience and predictive maintenance. For these particular use cases, Confluent distinguishes itself by processing data while it is in motion, enabling real-time rather than reactionary handling.
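As a rough illustration of processing data while it is in motion, the sketch below flags a card that makes too many transactions inside a sliding time window, evaluating each event as it arrives instead of in a later batch. It is plain Python and does not use Kafka or Confluent APIs. The event layout, thresholds and function name are hypothetical.

```python
from collections import defaultdict, deque


def flag_rapid_transactions(events, window_seconds=60, max_in_window=3):
    """Yield events that exceed max_in_window per card within the window.

    events: iterable of (timestamp_seconds, card_id, amount) in time order.
    """
    recent = defaultdict(deque)  # card_id -> timestamps inside the window
    for timestamp, card_id, amount in events:
        window = recent[card_id]
        # Drop timestamps that have aged out of the sliding window.
        while window and timestamp - window[0] > window_seconds:
            window.popleft()
        window.append(timestamp)
        if len(window) > max_in_window:
            yield timestamp, card_id, amount


stream = [
    (0, "card-A", 20.0),
    (10, "card-A", 35.0),
    (15, "card-B", 12.0),
    (20, "card-A", 50.0),
    (25, "card-A", 900.0),  # fourth card-A event within 60 seconds
    (200, "card-A", 15.0),  # earlier events have aged out by now
]
flagged = list(flag_rapid_transactions(stream))
```

Because the function is a generator, it emits a flag as soon as the offending event is seen, which is the property that matters for use cases such as fraud detection.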

Confluent Enterprise is available with a subscription.

Databricks

Founded by the team that created Apache Spark, a very active open source project in the big data ecosystem, Databricks provides a unified analytics platform that consolidates data science and engineering in one workflow to help data professionals bridge the gap between raw data and analytics.

The Databricks platform powers Spark applications to offer a production environment in the cloud via a collaborative and integrated environment. Databricks democratizes and streamlines the process of exploring data, prototyping and putting data-driven applications into operation.

Pricing for the Databricks platform varies depending on the cloud service provider, the type of workloads and the features/capabilities.

Google

Google touts its Cloud Machine Learning (ML) Engine as a managed service enabling data scientists and developers to build and bring into production machine learning models. Google couples it with prediction and training services.

The service combines the managed infrastructure of Google Cloud Platform with TensorFlow, an open-source software library for dataflow programming across a range of tasks. With the service, organizations can run TensorFlow applications in the cloud and train machine learning models at scale. In addition, they can host the models in the cloud for new data predictions.

Cloud ML Engine manages the computing resources that a training job needs to run, so companies can focus more on their models than on hardware configurations or resource management, according to Google.

A number of components make up Cloud ML Engine. The REST API, the core of the engine, is a set of RESTful services for managing jobs, models and versions, and for making predictions on hosted models on Google Cloud Platform. Companies can use the gcloud command-line tool to manage models and versions and to request predictions. The Google Cloud Platform Console also enables management of models and versions, and provides a graphical interface for working with machine learning resources.
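For readers who want a feel for what a prediction request to a hosted model might look like, the sketch below only assembles a URL and JSON body. It sends nothing. The endpoint shape and the `instances` field reflect the v1 online prediction API as the editors understand it and should be checked against Google's current documentation. The project and model names are hypothetical, and a real call would also need an authenticated HTTP client.

```python
import json


def build_predict_request(project, model, instances, version=None):
    """Return (url, body) for an online prediction call; nothing is sent."""
    name = f"projects/{project}/models/{model}"
    if version is not None:
        # Target a specific model version instead of the default one.
        name += f"/versions/{version}"
    url = f"https://ml.googleapis.com/v1/{name}:predict"  # assumed endpoint shape
    body = json.dumps({"instances": instances})
    return url, body


url, body = build_predict_request(
    project="example-project",  # hypothetical project ID
    model="example_model",      # hypothetical model name
    instances=[[1.0, 2.0, 3.0]],
)
```

Keeping request construction separate from sending makes the payload easy to inspect before any credentials or network access are involved.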

Another key piece of Cloud ML Engine is Cloud Datalab, an interactive computational environment for visualizing data.

In addition, Google offers Cloud Tensor Processing Units machine learning accelerators, which are still in beta as of this writing, as well as Cloud AutoML, which is in alpha.

Hortonworks Inc.

Hortonworks Data Platform (HDP) is an enterprise-ready, open source big data distribution platform. HDP addresses a range of data-at-rest use cases, including supporting real-time customer applications and providing analytics.

The platform includes data processing engines that support SQL, real-time streaming, data science and AI use cases, among others. Organizations can use Apache Spark as part of HDP for text analytics, image detection, recommendation systems, outlier detection, cohort/clustering analysis and real-time network analysis, among other use cases.

HDP is also certified to work with the IBM Data Science Experience (DSX), an enterprise AI platform for data scientists that quickly deploys models at scale. The intention of DSX is to facilitate collaboration and to utilize open source tools in an enterprise-grade environment.

DSX offers a suite of open source data science tools, such as RStudio, Apache Spark, Jupyter and Apache Zeppelin notebooks, all of which are integrated into the platform.

IBM

The IBM Power Systems Accelerated Compute Server, the AC922, delivers performance for AI, modern high-performance computing and data analytics workloads.

At the heart of the AC922 is the new IBM Power9 CPU, which provides I/O technology with PCIe gen 4, OpenCAPI and next-generation Nvidia NVLink. This enables 9.5 times faster AI data transfer from processor to accelerator compared against PCIe gen 3 based x86 systems, according to IBM.

The Power9 supports up to 5.6 times more I/O and two times more threads than x86 alternatives. It is available for configurations with anywhere between 16 and 44 cores in the AC922 server.

The server is the backbone of the Coral supercomputer, which is on track to provide 200-plus petaflops of computing and three exaflops of AI-as-a-service performance. Enterprises can use the server to deploy data-intensive workloads, such as deep learning frameworks and accelerated databases.

Informatica LLC

The Informatica Intelligent Data Platform, which runs on Informatica's Claire engine, provides a data management foundation for enterprise artificial intelligence infrastructures.

Claire is an AI-driven engine combining metadata, AI and machine learning to make intelligent recommendations and to automate the development and monitoring of data management projects. It drives the intelligence of all the Intelligent Data Platform capabilities using machine learning to detect similar data across thousands of databases and file sets, find data quality anomalies, identify data structures, predict and resolve performance issues, and more.

For example, Claire uses intelligent data to discover data, detect duplicates, combine individual data fields into business entities, propagate tags across data sets and recommend data sets to users. Claire can automatically deduce data domains, such as people, products, codes, hire dates, locations and contact information, across databases and unstructured files, and it can identify an entity, such as a purchase order or health record, made up of a collection of data domains.

Informatica does not provide pricing information.

Information Builders

Information Builders iWay 8's secure, scalable environment supports microservices, big data and other data integration strategies. It provides support for applications and lays the foundation for a service- and event-oriented blockchain architecture.

In environments with sensory or other streaming data, iWay's data management technology natively ingests, cleanses, unifies and integrates big data with other information sources, providing organizations with contextual intelligence that lends itself to actionable insights.

The iWay Service Manager integrates into existing operations infrastructures to merge business data. It also enables access to new AI data types and sources by integrating applications, services and APIs without the need for coding. Developers can reuse any applicable configuration component, from a simple connection definition to the entire business logic.

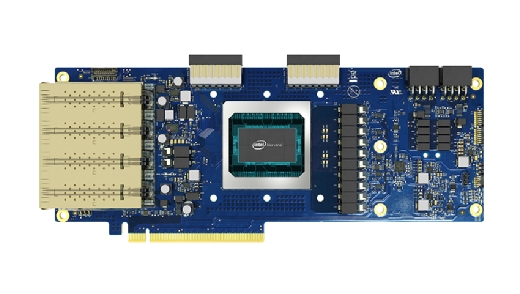

Intel

Intel claims it designed the Nervana Neural Network Processor specifically for neural networks with the goal of providing enterprises with the flexibility they need to support deep learning, while also making core hardware components as efficient as possible.

The new processor gives software the flexibility to directly manage data locally, both within the processing elements and in high-bandwidth memory (HBM) itself, according to Intel.

Tensors can be split across HBM modules in order to ensure that in-memory data is always closest to the relevant compute elements. HBM supports up to 1 TB per second of bandwidth between the compute elements and the external memory. The software can determine which blocks of data are stored inside the processing elements long term, thus saving power by reducing data movement to and from external memory.

Microsoft

Through Azure, Microsoft provides an AI platform, including tools, services and artificial intelligence infrastructure, for developers, whether the developers are modernizing existing applications or looking for advanced capabilities to develop new AI services.

The vendor's prebuilt AI products, including Microsoft Cognitive Services, are a collection of intelligent APIs for developers to include vision, speech, language, search and knowledge technologies in their apps with minimal code. As no data science modeling is required, the apps can perform tasks such as communicating with users in natural language, identifying relevant content in images and recognizing users by voice.

In the conversational AI space, Azure Bot Service is a platform integrated into the Azure Platform to build relatively natural human and computer interactions.

Azure Machine Learning offers a cloud service for data scientists to create custom AI and machine learning models.

Nvidia Corp.

Nvidia's DGX Systems, based on the company's Volta GPU platform, consist of the DGX-1, DGX-2 and DGX Station. The lineup also includes GPU Cloud, Nvidia's catalog of containers, deep learning software, high-performance computing and partner apps, and visualization tools. Nvidia claims its DGX Systems "are designed to give data scientists the most powerful tools for AI exploration."

The DGX-1 and DGX-2 are supercomputers built for AI and machine learning. The DGX-2 is the more powerful of the two, with what Nvidia claims is 10 times the performance of DGX-1. It weighs 350 pounds, costs $399,000 and features 16 Nvidia Tesla GPUs over two boards, combining for 512 GB of second-generation high-bandwidth memory (HBM2).

Other specs include 1.5 TB of standard RAM, two Intel Xeon Platinum CPUs and 30 TB of NVMe solid-state drive (SSD) internal storage. In terms of networking, the DGX-2 has 12 total NVSwitches, with 2.4 TB per second bisection bandwidth and dual 10 and 25 GbE.

The smaller DGX-1 resembles a server rack and weighs 134 pounds. It costs $149,000 and features eight Nvidia Tesla V100 GPUs, 256 GB of GPU memory, 512 GB of standard RAM, two 20-core Intel Xeon E5-2698 v4 processors, and four 1.92 TB SSDs.

DGX Station is the desktop version of the DGX-1 and DGX-2, which Nvidia brands as the "world's first personal supercomputer for leading-edge AI development." It has four Tesla V100 GPUs with 64 GB of HBM2 memory, weighs 88 pounds and costs $49,900 as of this writing.
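The quoted specs invite a quick back-of-the-envelope comparison. The sketch below divides each system's GPU memory and list price by its GPU count, using only the figures cited above. Price per GPU is a crude yardstick, since each price also covers CPUs, storage and networking. The function and variable names are illustrative.

```python
# Figures quoted above: (GPU count, total GPU memory in GB, list price in USD).
systems = {
    "DGX-2": (16, 512, 399_000),
    "DGX-1": (8, 256, 149_000),
    "DGX Station": (4, 64, 49_900),
}


def per_gpu(gpus, memory_gb, price_usd):
    """Return (memory per GPU in GB, price per GPU in USD)."""
    return memory_gb / gpus, price_usd / gpus


summary = {name: per_gpu(*spec) for name, spec in systems.items()}
for name, (mem, price) in summary.items():
    print(f"{name}: {mem:.0f} GB per GPU, ${price:,.2f} per GPU")
```

By this measure, the DGX-2 and DGX-1 both work out to 32 GB of GPU memory per GPU and the DGX Station to 16 GB, while the price per GPU falls from $24,937.50 to $18,625.00 to $12,475.00.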

Oracle

Oracle has engineered Oracle Cloud to streamline model training by utilizing customers' essential enterprise data and operating on a high-speed network, in object storage and in GPUs. Oracle AI Platform Cloud instances come preinstalled with familiar AI libraries, tools and deep learning frameworks. Machine learning practitioners also have access to Oracle Object Storage and can connect to existing Spark/Hadoop clusters.

Oracle's autonomous capabilities are integral to the entire Oracle Cloud Platform, including what the company calls the world's first autonomous database. The Oracle Autonomous Database uses advanced AI and machine learning to eliminate human labor, human error and manual tuning, delivering increased availability and performance at a lower cost.

The Oracle AI Platform Cloud Service runs on the Oracle Cloud Infrastructure to administer specialized deep learning applications. The differentiated artificial intelligence infrastructure layer includes NVMe flash storage and GPUs on a 25 Gb network.

Oracle delivers AI capabilities to customers either in the public cloud on a subscription basis or on premises via traditional licensing.

Qubole Inc.

Qubole's cloud-native Big Data Activation Platform enables organizations to activate petabytes of AI data faster while continuously lowering costs.

Data scientists can use the platform to develop, test and deploy AI applications through a common, notebook-style user interface. The company says it provides a way to avoid legacy system lock-in, enabling organizations to use any engines, notebooks and cloud services that meet their needs.

Data scientists, data engineers and data analysts can use the platform for use cases including extract, transform and load/reporting, ad hoc queries, machine learning, and stream processing. It works with cloud offerings including AWS, Microsoft Azure, Oracle Bare Metal Cloud and Google Cloud.

The platform's security features include compliance and privacy controls, flexible and granular access controls, built-in encryption and key management, and manageable data controls and configuration.

Qubole offers a combination of pricing packages based on usage.

Skymind

The Skymind Intelligence Layer (SKIL) is enterprise AI software that helps data scientists and data engineers build, deploy and monitor AI applications within production environments.

SKIL bridges the gap between the Python data science ecosystem -- TensorFlow and Keras -- and the Java big data ecosystem -- Hadoop and Spark. With the ability to import pretrained Python models, distribute training across clusters, invoke models in batch or real time, and monitor model performance, SKIL aims to be a cross-team platform for data scientists, data engineers and DevOps/IT.

SKIL integrates with Hadoop and Spark for use in business environments on distributed GPUs and CPUs on premises, in the cloud or in a hybrid environment.

The SKIL starter plan begins at $60,000 for a one-year license and a year of enterprise support.

SAP

The SAP Leonardo Machine Learning Foundation is a central hub for machine learning within SAP's Cloud Platform. It enables organizations to build their own models through a bring-your-own-model capability, to retrain prebuilt models or to run pretrained machine learning algorithms that cover a variety of standard business needs. Companies can use the SAP Leonardo Machine Learning Foundation with SAP products or in other areas of the business.

Leonardo Machine Learning offers AI and machine learning capabilities that integrate with existing business processes. Machine learning capabilities are available to customers in three different ways: as a platform through SAP Leonardo Machine Learning Foundation, embedded into SAP products or as stand-alone products.

SAP Leonardo also has a few select machine learning capabilities that the company sells as stand-alone products based on usage. One example is SAP Brand Impact, which enables brands to automatically detect their logo in live video feeds to measure and track exposure in real time.

SAP also makes its AI offerings, specifically natural language processing, available in the form of digital assistants and chatbots. All of these delivery options offer machine learning capabilities.

SAP says it is working to embed machine learning into all of its existing product areas, and it adds new capabilities with each update and release. Some of these new developments are available at no additional cost to existing SAP customers, whereas other sets of capabilities are available for an additional fee.

SAP Leonardo Machine Learning Foundation billing is based on node per hour usage.

SAS Institute Inc.

SAS Data Management integrates, cleanses, migrates and improves data across platforms to produce consistent and accurate information. The software also has inherent AI capabilities, including a comprehensive natural language processing system, fuzzy logic approaches to address inexact reasoning and a neural network to understand data patterns.

In addition, the metadata management and lineage functions of SAS Data Management intelligently trace the evolution of data elements across an organization. This process ensures the data is trusted for AI functions in an automated, reusable and sharable environment.

Another product, SAS Event Stream Processing, analyzes high-velocity big data while it's still in motion so users can take immediate action. It can quickly normalize the data, identify patterns, look for unusual conditions, utilize predictive models, apply machine learning, and initiate a call to action or kick off another business process.

SAS Event Stream Processing can manage any amount of data at any speed from multiple AI data sources, all in a single interface. With this type of capability, a manufacturer could know that a machine is going to fail before it does, for example, and a grocery store could get the same early warning about a refrigeration unit.
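The refrigeration example can be sketched as a simple rolling-baseline check on a stream of temperature readings. This is a generic illustration in plain Python, not SAS code or a SAS algorithm. The window size, tolerance and sample readings are hypothetical.

```python
from collections import deque
from statistics import mean


def drift_alerts(readings, window=5, tolerance=2.0):
    """Yield (index, value) when a reading drifts above the rolling mean.

    A reading is flagged when it exceeds the mean of the previous `window`
    readings by more than `tolerance` (same units as the readings).
    """
    history = deque(maxlen=window)
    for index, value in enumerate(readings):
        # Only compare once a full window of earlier readings is available.
        if len(history) == window and value - mean(history) > tolerance:
            yield index, value
        history.append(value)


# Hypothetical refrigeration temperatures in degrees Celsius.
temperatures = [3.0, 3.1, 2.9, 3.0, 3.2, 3.1, 5.6, 6.4, 3.0]
alerts = list(drift_alerts(temperatures))
```

Here the two warm readings are flagged as they arrive, which is the kind of early signal that would let a store act before a unit fails outright.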

Pricing for both products is based on capacity.

Talend Inc.

Talend Data Fabric is a cloud and big data integration platform designed to improve IT agility while accelerating company-wide business insight.

Based on open technologies, Talend Data Fabric is a unified set of enterprise AI tools for a range of integration styles: real-time or batch, big data integration, application integration, data quality, data preparation, data stewardship or master data management, on premises or in the cloud. It provides support for self-service integration, machine learning and collaborative data governance.

Talend Data Fabric provides more than 900 prebuilt connectors and components to leading databases, big data and NoSQL technologies, cloud platforms -- AWS, Azure, Google, Snowflake -- SaaS applications, messaging systems and more. The product runs on Linux, Solaris, macOS and Windows.

Pricing is based on the number of developers and includes unlimited connectors.